HEP-CCE Activities

The HEP-CCE comprises the following four technical teams.

Portable Parallelization Strategies for High-Performance Computing Systems

High-energy physics (HEP) experiments have developed millions of lines of code over decades that are optimized to run on traditional x86 CPU systems. The CCE Portable Parallelization Strategies (PPS) team will help define strategies to prioritize codes to parallelize and determine how to parallelize these codes in a portable fashion so that the same code base can run on multiple architectures with few or no changes.

Fine-Grained I/O and Storage on HPC Platforms, including Data Models and Structures

HEP experiments rely on file-based input/output (I/O) and storage to process hundreds of petabytes of data every year. The CCE Fine-Grained I/O and Storage (IOS) team will help optimize I/O performance at scale on U.S. Department of Energy high-performance computing systems by proposing fine-grained parallel I/O and storage solutions. In collaboration with PPS, this team will design data models that map efficiently to memory constructs.

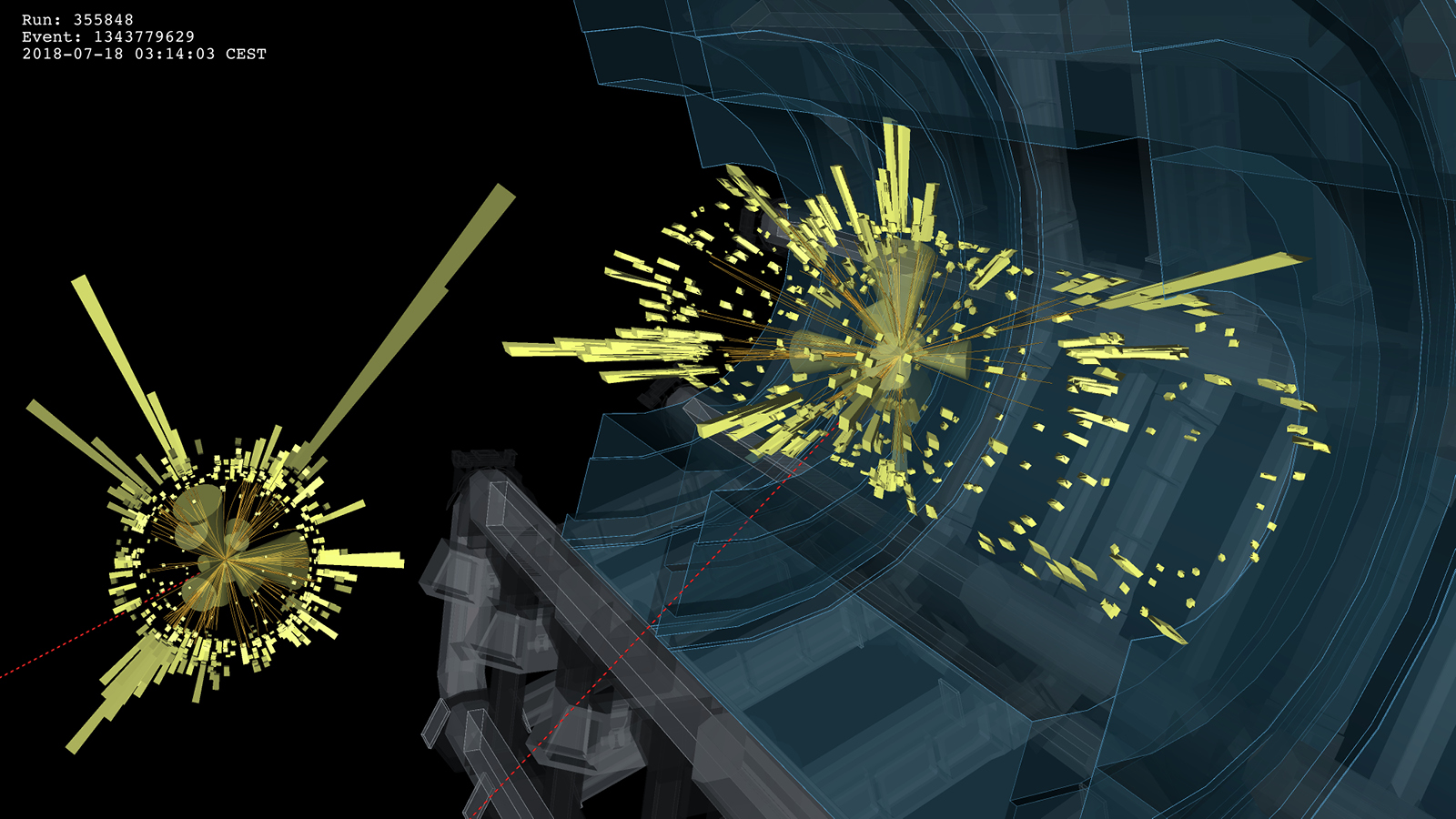

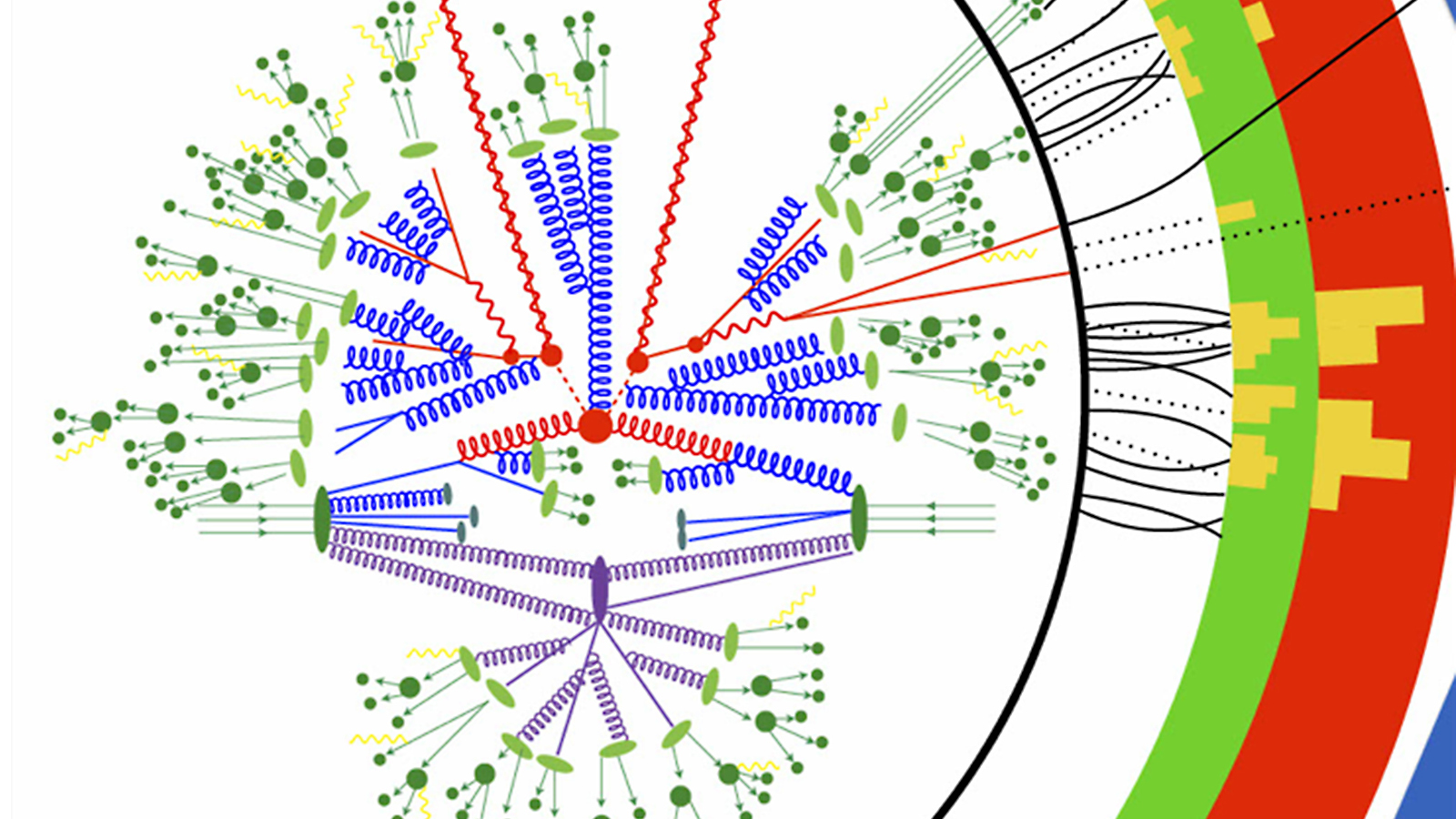

Event Generators on High-Performance Computing Systems

Monte Carlo simulation of particle collisions (event generation) will consume a significant fraction of computational resources at CERN’s High-Luminosity Large Hadron Collider. The CCE Event Generators (EG) team will develop, from scratch, a parallel matrix-element generator that runs on new and traditional architecture. This team will coordinate with the HEP Software Foundation EG group and efforts worldwide. In collaboration with PPS, it will explore multiple portable parallelization strategies for that.

Complex Workflows on High-Performance Computing Systems

High-performance computing (HPC) systems and their software stacks are not optimized for complex, dynamic workflows such as cosmology data pipelines and particle physics uncertainty quantification studies. Conversely HEP distributed workflow management systems are not optimized for execution on a single HPC system. The CCE Complex Workflows (CW) team will identify requirements for production and user-focused workflows, components, and execution environments. It will then develop HPC, container, and data scheduling models for improving performance in combination with node-level parallelization.