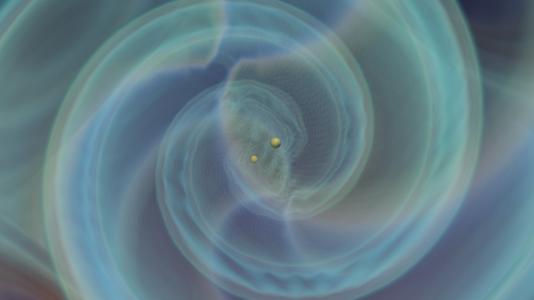

Gravitational waves are ripples in spacetime generated by extremely energetic cosmic events, such as the collision of black holes and neutron stars. These waves of undulating spacetime propagate away from these cataclysmic events, traveling unimpeded throughout the universe at the speed of light, carrying unique insights about the astrophysical properties of their sources.

Since 2015, the Laser Interferometer Gravitational-wave Observatory (LIGO) and its international partners have detected nearly 100 gravitational wave sources. This wealth of information will shed new light on the astrophysical processes that enable the existence of observed black holes and neutron stars.

Realizing this science depends critically on the algorithms we use to extract new knowledge from experimental data. An international team of scientists, led by the U.S. Department of Energy’s Argonne National Laboratory, has developed novel artificial intelligence (AI) methods that reduce time-to-insight from hours to seconds compared to state-of-the-art algorithms for gravitational wave data analysis, while also enabling new physics that is beyond the scope of traditional methods.

This study shows that combining black hole physics, advances in AI, and extreme scale computing enables the creation of AI models that learn and describe the physics of the gravitational waves emitted by binary black hole mergers.

The modeled waveforms used by scientists include higher order modes, which are similar to musical overtones. This additional information is critical to reveal new insights about black hole physics. In the same way the overtones of a piano sound different from those of a violin, higher order wave modes help measure with exquisite precision the individual spins of binary black holes, in particular when the binary components of black hole mergers have disparate masses. This feat is beyond the capabilities of state-of-the-art gravitational wave data analysis methods, including Bayesian inference, a method whose key principle is to learn from experience: a number of hypotheses are considered at first, and as more data become available, the probability of each hypothesis is updated. In time, the hypotheses that best describe the data are identified as the most probable description of a given phenomenon.
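The idea of updating the probability of each hypothesis as data arrive can be illustrated with a minimal sketch. Everything here is a hypothetical toy chosen for illustration, not the analysis used by gravitational wave scientists: three candidate values for a single parameter, a handful of made-up noisy measurements, and an assumed Gaussian noise level.

```python
import math

def bayes_update(prior, likelihoods):
    """Multiply each prior by its likelihood, then renormalize to sum to 1."""
    unnormalized = {h: prior[h] * likelihoods[h] for h in prior}
    evidence = sum(unnormalized.values())
    return {h: p / evidence for h, p in unnormalized.items()}

def gaussian_likelihood(measurement, hypothesis, sigma):
    """Unnormalized Gaussian likelihood of a measurement if the hypothesis were true."""
    return math.exp(-((measurement - hypothesis) ** 2) / (2 * sigma ** 2))

# Hypothetical candidate values for one parameter; all start equally probable.
hypotheses = [0.2, 0.5, 0.8]
posterior = {h: 1 / len(hypotheses) for h in hypotheses}

# Made-up noisy measurements and an assumed noise level (illustrative only).
measurements = [0.71, 0.93, 0.64, 0.85, 0.77]
sigma = 0.2

# Each new measurement shifts probability toward the hypotheses that explain it.
for m in measurements:
    likelihoods = {h: gaussian_likelihood(m, h, sigma) for h in hypotheses}
    posterior = bayes_update(posterior, likelihoods)

most_probable = max(posterior, key=posterior.get)
print(most_probable, posterior)
```

After the five measurements, nearly all of the probability sits on the candidate closest to the data, which is the sense in which the best-fitting hypothesis becomes the most probable description.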

By going above and beyond the capabilities of Bayesian methods, the newly developed AI is enabling faster and better science, taking scientists into new, uncharted territory that is ripe for discovery.

Supercomputers are used to study black holes, neutron stars, and gravitational waves, phenomena that are described by Einstein’s theory of general relativity. In practice, one chooses an astrophysical scenario of interest, and then uses scientific software on supercomputers to produce large-scale simulations that shed light on the physics of such astrophysical sources.

On the other hand, one may also ask: given a numerical relativity waveform, what are the astrophysical parameters (the masses and spins of the binary components, the shape of their orbit, etc.) that determine its shape and length? This problem is important because we do not know a priori the nature of astrophysical sources in the universe, so scientists have developed a portfolio of numerical methods to analyze datasets and address this grand challenge.
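This inverse problem, going from a waveform back to the parameters that shaped it, can be sketched with a toy example. The code below is only an illustration under loud assumptions: `toy_chirp` is a hypothetical stand-in signal with two made-up parameters (a starting frequency and a chirp rate), not a numerical relativity waveform, and the brute-force grid search is a simple substitute for the far more sophisticated methods used in practice.

```python
import math
import random

def toy_chirp(f0, k, times):
    """Hypothetical toy signal whose frequency rises linearly from f0 at rate k."""
    return [math.sin(2 * math.pi * (f0 * t + 0.5 * k * t * t)) for t in times]

def recover_parameters(signal, times, f0_grid, k_grid):
    """Return the grid point whose template has the smallest squared residual."""
    best_params, best_cost = None, float("inf")
    for f0 in f0_grid:
        for k in k_grid:
            template = toy_chirp(f0, k, times)
            cost = sum((s - m) ** 2 for s, m in zip(signal, template))
            if cost < best_cost:
                best_params, best_cost = (f0, k), cost
    return best_params

# One second of data sampled at 512 points (illustrative choice).
times = [n / 512 for n in range(512)]

# Simulate an "observed" signal: a known toy chirp plus seeded Gaussian noise.
rng = random.Random(0)
true_params = (30.0, 10.0)
signal = [x + rng.gauss(0.0, 0.1) for x in toy_chirp(*true_params, times)]

# Search a small, made-up grid of candidate parameters.
recovered = recover_parameters(
    signal, times,
    f0_grid=[20.0, 25.0, 30.0, 35.0, 40.0],
    k_grid=[5.0, 10.0, 15.0, 20.0],
)
print(recovered)
```

The grid search compares the signal against every candidate template, which scales poorly as the number of parameters grows; that cost is part of why faster inference methods are attractive.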

We show that AI can infer the astrophysical parameters that determine the properties of the gravitational waves that describe quasi-circular, spinning, non-precessing, binary black hole mergers. This work required the use of extreme scale computing, since AI required tens of millions of modeled waveforms to understand the physics of the problem, and training these AI models required thousands of GPUs on the Summit supercomputer to reduce time-to-insight. Furthermore, we incorporated physics and mathematical principles in the training of AI models to help AI more quickly identify the patterns and features in higher order modes that unveil the physical properties of black hole mergers.

The combination of these elements (black hole physics, AI, and extreme scale computing) led to the creation of AI models that outperform a diverse set of other machine learning methods in computational speed by an order of magnitude. Most importantly, it enables science that is inaccessible to state-of-the-art Bayesian inference, such as accurately measuring the individual spins of black hole mergers whose binary components have asymmetric masses (for example, black holes of 50 and 8 solar masses), thus providing new insights into the formation mechanisms of black holes.

Physics-inspired AI and extreme scale computing are breaking barriers in scientific discovery at an ever-increasing pace, unveiling new frontiers of knowledge that seemed out of reach.

More Information

Asad Khan, E.A. Huerta and Prayush Kumar, AI and extreme scale computing to learn and infer the physics of higher order gravitational wave modes of quasi-circular, spinning, non-precessing black hole mergers. Physics Letters B 835, 137505 (2022)

This research was funded by the National Science Foundation and the Innovative and Novel Computational Impact on Theory and Experiment project Multi-Messenger Astrophysics at Extreme Scale in Summit. This material is based upon work supported by Laboratory Directed Research and Development funding from Argonne National Laboratory, provided by the U.S. Department of Energy Office of Science. This research used resources of the Argonne Leadership Computing Facility and Oak Ridge Leadership Computing Facility, which are DOE Office of Science User Facilities. Additional support was provided by the Department of Atomic Energy, Government of India, and by the Ashok and Gita Vaish Early Career Faculty Fellowship at the International Centre for Theoretical Sciences.

Contact

Eliu Huerta, Lead for Translational AI, Data Science and Learning Division, Argonne National Laboratory; The University of Chicago

elihu@anl.gov